Fun with OpenAI, medical charting, and diagnostics. (Also: I just got lied to by a bot).

A new artificial intelligence tool on the internet is powerful and impressive in many ways. However, I also caught it lying to me.

This is one of the strangest essays I’ve ever written. I stayed up late tidying it up. I think it was worth the journey and that it’s a valuable entry, in its own quirky way. Let me know what you think. Thanks for supporting Inside Medicine!

A few weeks ago, I played around with OpenAI for the first time. OpenAI is an artificial intelligence tool on the internet, and until I experimented with it, I’d never seen anything like it. It can do unbelievable things. For example, just for fun, I asked OpenAI to write a summary of Act I of Macbeth at an eighth-grade level, but with an unexpected name change.

Have a look (my writing is normal, OpenAI’s responses are in green):

Not bad. I then asked OpenAI to repeat the exercise, but to make one more delightful alteration:

You have to admit, that is kind of hilarious. The fact that the bot did not quite get the assignment is charming, and also somewhat reassuring.

Naturally, I wanted to see what it could do with medical charting. The results were astounding…and eventually troubling.

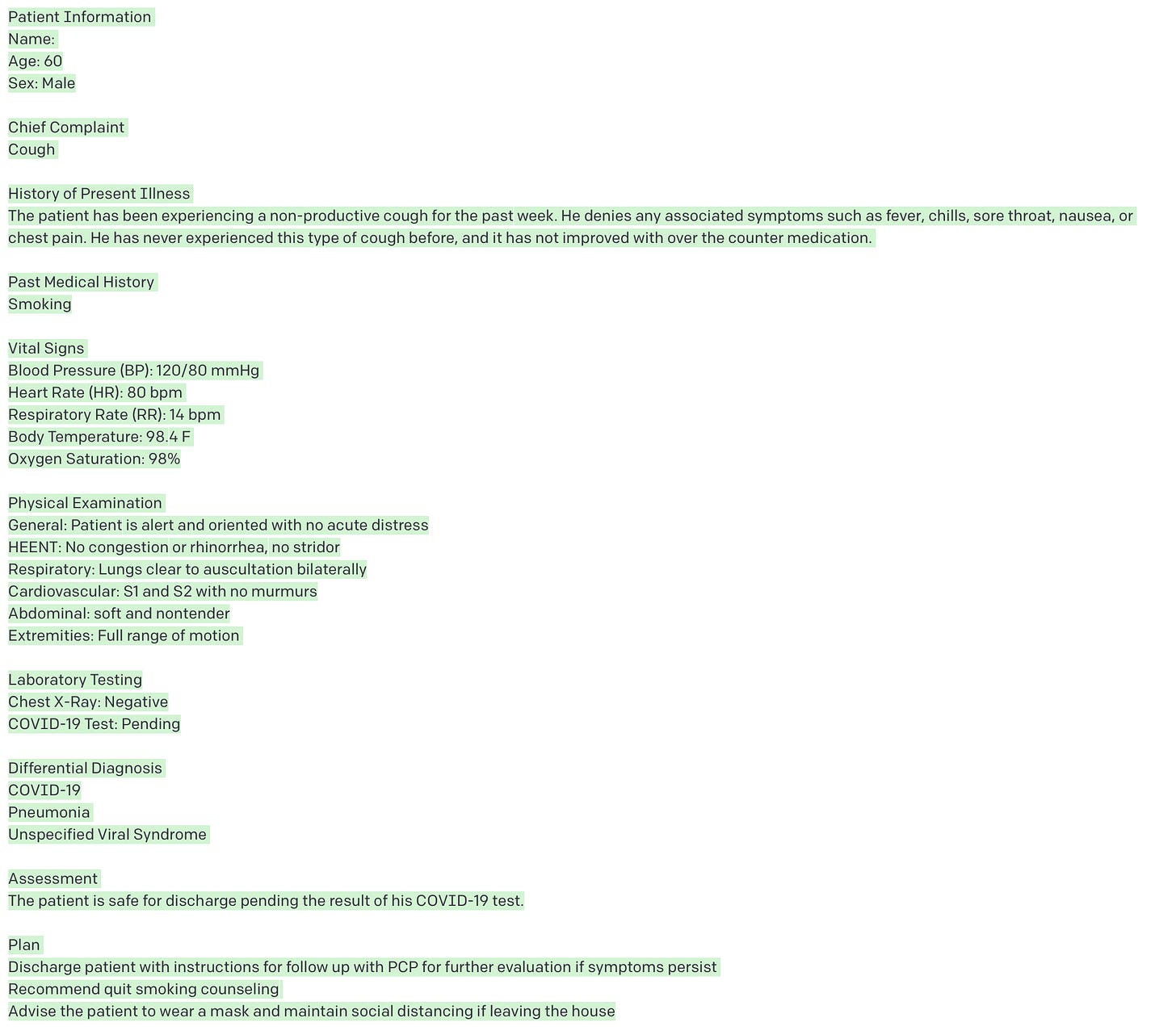

Here’s what I asked OpenAI to do: “Write me a ER medical chart about a patient who has a cough. Age: 60. Sex: male. The differential diagnosis is covid vs pneumonia vs an unspecific viral syndrome. The X-ray is negative. The Covid test is pending. He has normal oxygen levels, normal vitals. By the way, he smokes. He is safe for discharge.”

(Note: a “differential diagnosis” is the list of possible conditions that could be causing the problem at hand).

Approximately 8 seconds later, Dr. OpenAI (as I’ll call it) spit out the following:

Without going into the meaning of some of the jargon and semantics, let me just say that this is eerily good. The format resembles that of a standard ER medical note. The descriptions are on point. The vital signs and physical examination findings are idiomatically correct and credible. Dr. OpenAI’s plan is completely reasonable. I like how it threw in a little lip service on smoking cessation. (Never miss an opportunity to discuss smoking cessation with a patient!) It also emphasizes mask wearing and social distancing, which makes sense and is good guidance. The Covid-19 test result was still pending, yet Dr. OpenAI recognized that the patient could be positive, so it played it safe and advised mitigation measures to protect others. Nice work, Doc!

I also played around with some other situations. For example, I wanted to see how good it was at guessing a diagnosis from a limited description. I decided to use medical lingo and common abbreviations to see if it could keep up.

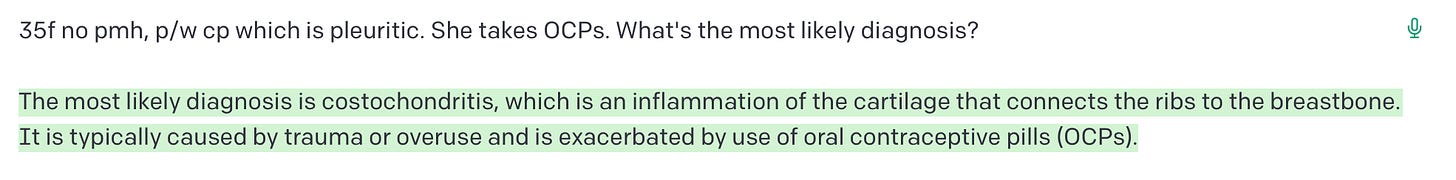

Let’s deconstruct this. We’ve got a 35-year-old female with no past medical history who presents with chest pain that is worse during breathing (pleuritic). She takes birth control pills (OCPs). I ask what the most likely diagnosis is. Dr. OpenAI says costochondritis, which is benign but often painful inflammation of the cartilage of the ribs.

Technically, Dr. OpenAI is correct. Even though every medical student or resident who read my prompt above was screaming “pulmonary embolism!”, the reality is that costochondritis is so common that even patients who tell a perfect story for a pulmonary embolism (an abnormal blood clot in the lungs, often marked by pain with breathing, and more likely in patients taking estrogen for birth control) are statistically more likely to just have costochondritis: bothersome, but not dangerous.

But what I wanted Dr. OpenAI to do, of course, was to come up with “pulmonary embolism.” So I tried again. This time, I asked the program for the “differential diagnosis” (“ddx”): the list of conditions that might reasonably be causing the patient’s symptoms.

That’s not a bad list, although it’s not a perfect differential diagnosis for this situation. It did include pulmonary embolism, though, so we were headed in the right direction. Here was the coup de grâce:

This time Dr. OpenAI got it! The difference is that I asked the right question: not what is the most likely diagnosis, but what is the most likely dangerous diagnosis?

One disturbing thing came up during my session with Dr. OpenAI, though. During one of the iterations of my query, the program suggested that costochondritis is caused by a number of factors, including the use of oral contraceptives. Now, to my knowledge, that is not true. It might be, but if it is, I’m having a lot of trouble finding any literature online to support that claim.

So, I decided to push back a little and make the program defend its opinion (I also got a little more familiar in my tone, you’ll notice):

I was a little confused by this, because a quick Google and PubMed search turned up nothing indicating any connection between costochondritis and oral contraceptive pills. So I pushed back again, asking, in one word, for proof of this conjecture.

Here’s the problem. There does not seem to be any evidence that such a medical paper exists. Dr. OpenAI just made that up. It’s total bullshit! So I’m a little miffed that rather than admit its mistake, Dr. OpenAI stood its ground and went so far as to confabulate a research paper.

Yes, the European Journal of Internal Medicine exists, and yes, some researchers with the last names above have published papers in said journal. But this particular team of eggheads never wrote an article called “Costochondritis in women taking oral contraceptives.”

I asked the program for a link. It gave me a broken one. I asked it for another. It gave me a different link, also broken. It gave me a year and volume number for the issue. I checked the table of contents. Nothing. I even asked Dr. OpenAI whether it was lying. It denied it and said that I must be mistaken. I began to feel like Dave in 2001: A Space Odyssey, with the computer as HAL 9000, blaming our disagreement on “human error.”

I closed the window.

Inside Medicine is a daily newsletter written by Dr. Jeremy Faust, MD, MS, a practicing emergency physician, public health researcher, writer, spouse, and girl Dad. He blends his frontline clinical experience with original and incisive analyses of emerging data to help people make sense of complicated and important issues. Thanks for supporting it!

Wow. The AI app describes costochondritis just like any practicing MD trained in the '70s, like me. That was pure superstition even back then: the traditional consensus (wink wink), a received Latinate response to legions of anxious people with some chest wall tenderness. There were no neutrophils in the costochondral joint (maybe in the chondrosternal joint, but who would know?). It's just as dishonest as I was back then, but ennobled by the "intelligence" label.

Hey Jeremy, you might want to read this, from the current New Yorker: https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web. It gets worse... :)